Imagine chatting with a friend when they suddenly start adding words you never said! 🤔 That's essentially what's happening with OpenAI's transcription tool, Whisper.

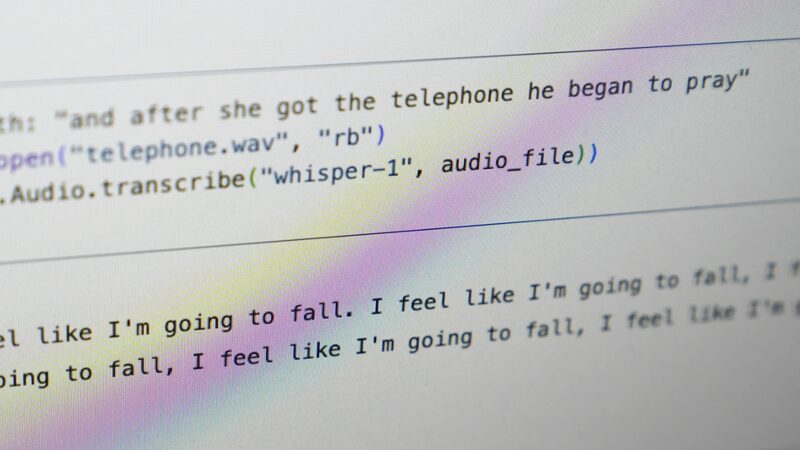

Touted as approaching "human-level" accuracy, Whisper has been turning heads in the tech world. But there's a glitch: it sometimes makes up chunks of text, even entire sentences, that were never spoken! 😱

Experts call this phenomenon "hallucinations," and it's causing quite a stir. More than a dozen software engineers and researchers have pointed out that Whisper sometimes inserts fabricated content into transcriptions, including sensitive or inappropriate remarks.

Why is this a big deal? Well, Whisper is used across industries worldwide, from translating interviews to creating video subtitles. More concerning is its use in medical centers to transcribe patient-doctor consultations. Yikes! 🏥 OpenAI itself warns against using Whisper in "high-risk domains."

Researchers have found hallucinations in a significant share of transcriptions. One University of Michigan researcher spotted them in 8 out of every 10 audio files he examined! And even short, clearly recorded audio snippets aren't immune: errors still pop up frequently.

With AI becoming more integrated into our lives, it's crucial to be aware of these hiccups. After all, nobody wants words put in their mouth, literally! 🗣️

So next time you're using an AI tool, remember: it's smart, but not perfect. Stay curious and stay informed! 🚀

Reference(s):

AI-powered transcription tool invents things no one ever said

cgtn.com