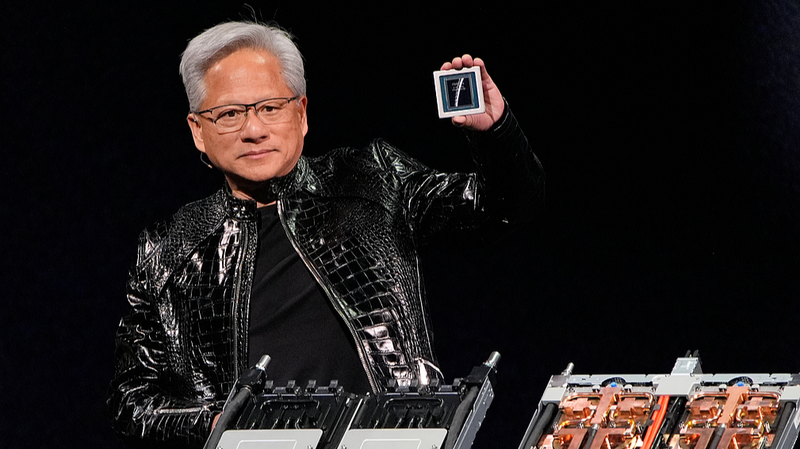

At CES 2026 in Las Vegas, Nvidia CEO Jensen Huang announced that the company’s next-generation AI chips are in full production and will arrive later in 2026. Nvidia says they deliver up to 5× the AI computing power of their predecessors, a boost for everything from chatbots to complex AI apps. 🤖✨

Meet the Vera Rubin Platform

The new platform, named Vera Rubin, is built around six custom Nvidia chips. Key highlights include:

- A flagship server with 72 GPUs and 36 new CPUs

- Scalable pods linking over 1,000 Rubin chips

- 10× faster token generation for smoother AI conversations

- A proprietary data format that Nvidia credits for the performance jump, achieved with only 1.6× as many transistors
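
For a rough sense of scale, here is a back-of-the-envelope sketch using only the headline figures quoted above. It assumes the "up to 5×" compute claim and the 1.6× transistor figure apply to the same comparison, which the announcement does not spell out, so treat the result as an illustration rather than a benchmark. The variable names are ours.

```python
# Headline figures quoted in this article (vendor claims, not measurements).
compute_gain = 5.0       # "up to 5x" AI computing vs. the previous generation
transistor_gain = 1.6    # transistor count relative to the previous generation
gpus_per_server = 72     # GPUs in the flagship server
cpus_per_server = 36     # CPUs in the flagship server

# Implied compute gain per transistor, assuming both figures hold at once.
per_transistor_gain = compute_gain / transistor_gain

# How many GPUs are paired with each CPU in the flagship server.
gpus_per_cpu = gpus_per_server / cpus_per_server

print(f"Compute per transistor: ~{per_transistor_gain:.1f}x")
print(f"GPUs per CPU: {gpus_per_cpu:.0f}")
```

If the claims hold, most of the gain comes from how the chips compute rather than from adding transistors, which is why the data format gets so much attention.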

Battle of the AI Chipmakers

While Nvidia still leads in AI model training, competition is heating up. Rivals such as AMD and Alphabet’s Google are developing their own AI chips. To stay ahead, Nvidia is betting on:

- A new storage layer called context memory storage, meant to speed up chatbot replies in long conversations

- Co-packaged optics in its new networking switches to link thousands of machines

Early Adopters and Beyond

CoreWeave will be among the first to roll out Vera Rubin systems, and Nvidia expects major cloud providers like Microsoft, Oracle, Amazon, and Alphabet to adopt them as well.

Driving into the Future

On top of hardware, Huang unveiled Alpamayo, open-source software (and its training data) to help self-driving cars make smarter decisions and leave a detailed paper trail for engineers. 🚗💡

What’s Next?

With this leap in AI chip power, businesses, developers, and startups from Silicon Valley to Latin America can look forward to faster, smarter applications. Get ready for a new era of AI innovation! 🚀

Reference(s):

"Nvidia CEO Huang says next generation of chips in full production," cgtn.com